I recently attended the Augmented World Expo (AWE) in Santa Clara with CrossComm’s Senior AR/VR Developer, Mike Harris. This was our third year attending. Afterward, we captured our thoughts, discoveries, and predictions in the conversation below. In it, we highlight some of the most impressive demonstrations of immersive tech that we saw, and consider questions around consumer adoption, privacy, and property rights to augmented space. We also hypothesize about what AWE 2019’s audience makeup and size (coupled with Apple’s recent announcements at WWDC) suggest about the changing landscape of immersive tech.

Top Takeaways from AWE 2019

Don: Hey, Mike. Thanks for joining me in this conversation around the AWE conference that we recently attended. I’d love to dig in with you into the details of our experience, and share with our community in the process. To kick off this conversation with a question:

What is the most significant thing that you experienced at AWE this year?

Mike: The thing that I was the most surprised by was how quickly things are advancing in terms of the actual technology that's required to have head-mounted AR that people can wear on an everyday basis. I saw several companies showing waveguides that were really impressive in terms of their brightness and resolution. There was a presentation by Qualcomm for the 5G chipset that they’re going to be using—they were talking about how it will allow for edge computing for AR experiences—both in terms of tracking and delivering the content to the glasses. So it was very much on everyone's mind. It seemed a lot closer than I expected it to be, especially given our experiences at the conference the previous year, which I think was right after the Magic Leap was announced, if that’s correct?

Don: Yeah, I think so. I would agree with that general sense that this seemed to be the first AWE where I finally got the sense that we’d begun to solve the hardware problem that is inhibiting the practical mass adoption of AR and VR wearables. For example, wearables like the Oculus Quest; those Nreal smart glasses that looked just like a pair of glasses, but with a pretty impressive display and fairly small form factor; as well as all the countless Chinese headsets that we saw over at CES and again at AWE. There is definitely a trend towards the commoditization of hardware, and that's probably a good thing for finally getting mass adoption underway.

Don sports the Nreal smart glasses at AWE 2019

Mike: Yeah, the one thing that popped into my head that I hadn't thought of before, seeing the Nreal glasses and the many Chinese companies that are producing various headsets, is that there are going to be some bad AR experiences as well. 6DOF tracking and waveguides are something that a lot of companies will have access to, and there’ll be better and worse implementations of them. So it was the first time I was really concerned about consumer interactions with headworn AR, and what people's first interactions are going to be like. How are we going to be able to communicate to people what they should expect from these experiences so they can use that information as a touchstone when they’re trying out and assessing these different devices? Hopefully it’s not just a race for the cheapest type of device that then degrades the AR experience overall.

Don: Yeah. It’s good for us to remember, as devs, that of course we're excited about any technological progress, but in order for this to be part of our everyday lives, it’s going to have to just work, and that’s not where a lot of these devices are at currently.

Mike: And that's something that we saw in VR as well. For a lot of people, their first VR experiences were with Google Cardboard or Gear VR, when people were just figuring out the basics of locomotion or comfort in VR. So they may have had a negative experience, perhaps because of low resolution or some other factor. Even to this day, when I ask people if they’ve tried VR, most people haven't. For those who have, it's usually with a Google Cardboard or something similar, and that sets their expectation for what VR is like. It made me realize that a very similar pattern might arise in AR as these devices become more widely used.

What was your favorite booth exhibit and why?

Mike: InnerOptic was my favorite exhibit because it was a brilliant implementation of AR: being able to visualize an ultrasound in place. Ultrasounds are three-dimensional objects sliced and abstracted to two dimensions, so whenever I see one on a monitor I have no idea what's going on in terms of the object being scanned. InnerOptic used a HoloLens in conjunction with an ultrasound wand, so when you looked at the wand you saw an AR projection of the 2D ultrasound as a flat surface aligned with the orientation of the wand. I found that to be a really powerful example of how AR can improve healthcare outcomes.

Don: For sure. I'm going to cheat a bit and mention two booths that were memorable experiences that stuck with me. The first was the booth for Looking Glass, where you and I had the opportunity to take a volumetric photo together and could see it within a matter of minutes inside a Looking Glass unit. Seeing us kind of stuck in a three-dimensional box was pretty awesome. Obviously there's a lot of room for the technology to grow and to improve, but I loved being able to see three-dimensional volumetric images and content without having to put anything on my face like 3-D glasses.

A volumetric capture of Don and Mike in a Looking Glass display

Mike: Yeah, I was impressed by the Looking Glass as well. I remember when I first learned about it from the tweets of people who had been able to get their hands on one. Obviously the three-dimensionality of it doesn't translate when you just see two-dimensional images or videos, but I was really intrigued. Then, when we were able to get our own and experiment with it in-house, the potential became readily apparent.

So this year, at AWE, it was awesome being able to see the larger form factor of the 15” screen. It seemed like the content on a screen that large had enough pixel density for you to really make out important features of the 3-D objects being displayed. And then there was the capacitive touch capability, where you could directly interact with the screen by touching it. I thought that was really powerful.

Don: The other booth that stuck in my mind was Varjo's booth. You had mentioned to me that it was almost like foreseeing the future of VR, and I agree with that. If we get to a point where our entire VR experience could be driven by that high-density display, I think it's going to make virtual reality a lot more immersive and practical for business applications, especially if you're able to read fine text the way you need to in everyday work, whether in the workplace, during training, or while operating equipment. It would also open the door to more sophisticated simulations and training opportunities.

Mike: Yeah. The demo that they had, which I'm sure was selected on purpose, was the inside of a cockpit with all the little dials and fine print labeling. It worked incredibly well. Having done this latest round of VR for the past half a decade or so, it was one of those magic moments (which are becoming fewer and farther between these days) where you just look at a virtual object and the resolution is exactly what your retina is expecting. There's no screen door effect; your brain takes over and expects it to be reality because it doesn't have to adjust to a fidelity that you're not used to in everyday life. It was pretty impressive.

Don: Can you explain, for those who may not know, what the Varjo VR and MR headsets are all about?

Mike: Sure. The Varjo display takes a novel approach to getting a higher resolution display on a VR headset. It actually has two displays: one standard display, which I believe is around the same resolution as high-end consumer headsets like the Vive Pro or the Rift S; and a smaller micro display, which has a density that I believe is at or around human eye resolution. The high resolution display is in the center of the user’s field of view, about the size of a VHS tape held at arm's length, and the headset blends between the two. So, while you're looking directly at the center of the display, you’re actually seeing things at incredibly high resolution. Since that's the only part of your field of vision that actually sees in high resolution, the entire image looks as it would in real life in terms of pixel density (as long as you continue to look straight forward).

As for the MR display (this is actually something that we had to set an appointment for in order to get a demo), they're using pass-through cameras: taking a video feed from the outside world, passing it through the computer’s GPU, and rendering it in each eye so that it essentially looks like you're seeing directly through the headset, and then overlaying AR content on top of that. This works just like AR glasses, except that it's pass-through AR instead of an additive display. This was really impressive. I believe they were using a Thunderbolt connection to run the video feed through the computer, which made it super low latency, the lowest latency for any pass-through VR that I've experienced. And it's also really high resolution, making use of those high resolution micro displays at the center of your field of view. So it was the closest I've ever seen to actually tricking your eyes into believing that the headset is transparent and you're just viewing AR content on top of the real world.

Mike tries out Varjo's pass-through MR device

Did you see anything that you did not expect or that surprised you?

Mike: I mentioned this previously, but the speed at which the technology is advancing was probably the biggest surprise for me. That and the number of new companies that are out there providing out-of-the-box solutions for enterprises to make their own AR experiences. It feels a little premature because we're still figuring out a lot of the fundamentals of XR UI and UX and how people are going to interact with this new type of computer interface, but obviously there's a lot of excitement in the market and there is a lot of interest from across various verticals in incorporating AR into various workflows.

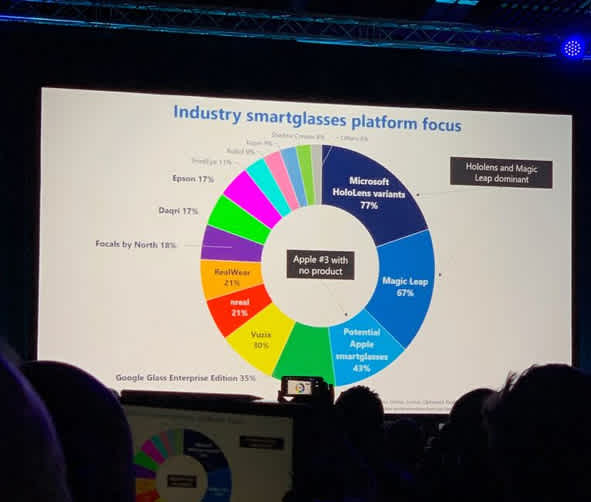

Don: For me, I was surprised by how many of the top exhibitors from previous AWE conferences are no longer in business. It tells me that we are still in a churn. The industry is still in a nascent Wild West phase where anything goes; the players generating the most hype today may not necessarily be the ones with the longevity for tomorrow. Also, we talked about the accelerated commoditization of cheap hardware and, to me, that means that the advantage going forward is not really going to be the hardware, but the whole platform and ecosystem. I think that puts the competitive advantage into the hands of companies that have a lot of experience building out and making platforms successful, like Apple, Google, Microsoft and, to some extent, Amazon and Facebook. It's one thing to create hardware that works, but it's another thing to create the entire healthy ecosystem of developers, distribution, consumer friendliness, operating system, and efficient APIs that let developers do amazing stuff with very little effort. That takes years to build, and it's going to be interesting to see which of these traditional platform holders (and I'm looking specifically at Apple) are going to be able to translate some of that institutional knowledge and intellectual property into building out (very quickly) a successful platform for AR wearables. Already we see Apple laying the foundations for native spatial app development, especially with the recent WWDC announcements around RealityKit and Reality Composer. That brings me to new concerns or red flags about AR and VR this year: the game could still very much change depending on what these traditional platform holders do, and on whether and how they decide to go in full force with augmented reality. And I think the rest of the industry, whether or not they say it, feels that. That's evident in that Digi-Capital survey that was done about the kind of wearable platforms that people are interested in: Apple was like number three on the list and they don't even have an AR wearable right now.

Mike: Or that’s been announced whatsoever.

Don: Yes, exactly. So the game could be completely different a year from now, which keeps things interesting, but it also makes AR tough to invest in and figure out in the meantime, both from a business perspective and a development perspective. One more thought: until wearable AR gains traction, I think ARKit and ARCore apps are simply going to be the most accessible way to deploy AR experiences for the foreseeable future. And with ARKit 3, which was also recently announced at WWDC, we’re going to be able to see increasingly sophisticated mixed reality experiences on the mobile phone as well.

Slide from AWE showing Apple ranked #3 most important smartglasses platform despite having no product on the market

Mike: Yeah, that's definitely where it is currently and where it will be for some time. I guess it’s sort of telling that, at the conference, we saw very few actual handheld AR booths. Everybody's focus was on headworn AR experiences, the Looking Glass displays, and other ways to interact with AR. Another thing that was really interesting to me is when they asked the people in the audience to raise their hands based on the number of years that they had been attending AWE. This was AWE’s 10th year, and the number of people who said that this was their first conference was huge. It was by far the majority of the audience, which shows that there is a lot of interest. My understanding is that a lot of it is due to various companies now interested in AR sending their reps to these conferences to learn more about it. So, while last year there were a lot more insiders (people who do this for a living or have a passion for it), this year it seemed like more people with a general interest in AR filled out the audience.

Don: I do think that what we saw, with more than half the audience raising their hands saying that this was their first AWE, is a good sign. And I know that they were intending for that to be interpreted as such, but the conference did not seem to be twice as big as the last one. So it could also be taken as an anecdotal sign that the churn and turnover are still going on, and that a lot of the players that were in AR in previous years may have left due to the prolonged nature of the trough of disillusionment.

Mike: Yeah, definitely.

Don: The other concern or red flag is that (and this is more from a philosophical and business perspective) it seems like the technology is getting far ahead of privacy concerns. Every industry and every vertical is going to have to deal with that in their own way, according to their own requirements. What do you do when you live in a world where everyone is wearing smart glasses with smart cameras (potentially driven by image recognition, machine learning, and artificial intelligence) absorbing and interpreting the background and the context around the wearer? And how are you going to deal with those kinds of commonplace devices in a world where businesses or governments need much tighter privacy, but we increasingly depend on those devices to function? We run into those issues with smartphones today, but if smart glasses start to become central and indispensable to our everyday life in developed nations, we're going to really wrestle with that conflict.

Mike: Yeah, we're going to have a huge amount of the population wearing an array of cameras, surveying the environment around them, constantly taking images and pushing them up for analysis. So what sort of world is that going to be where, any time that you're out in a public space or sharing a space with anyone wearing these smart glasses, there are constantly going to be images of your environment being captured?

Don: And which corporations will eventually have a total encompassing observation of the entire world based upon this networked array of cameras across their entire user base? How do we deal with that?

Is there any particular news you think would be relevant to the broadest group of people?

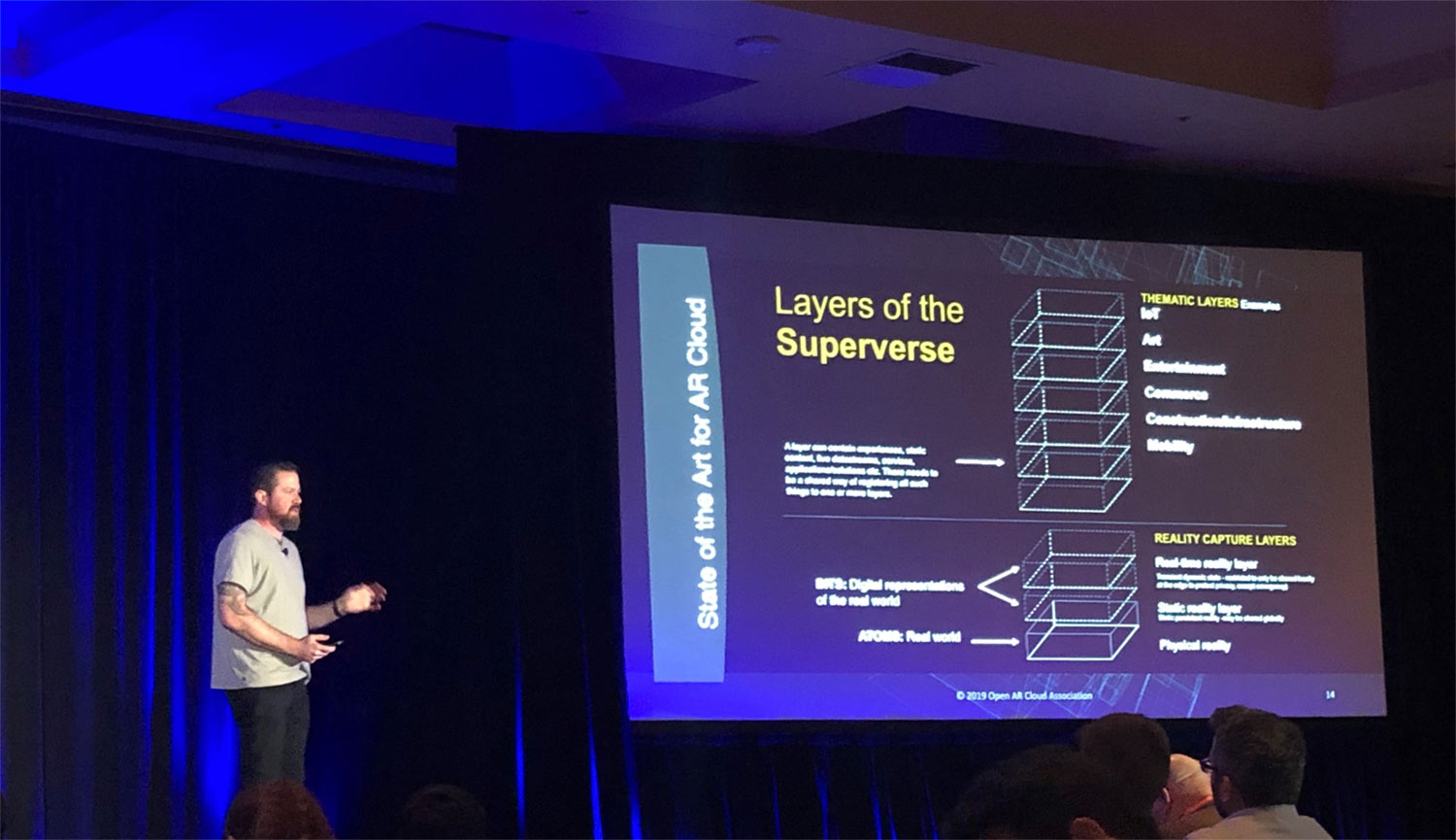

Mike: I think that there was a lot of interest around AR Cloud. The idea is to capture an understanding of the environment that allows content to be placed in the world using anchor points, based on image processing. I think that's going to be a major component of AR experiences going forward. It needs to be able to map content onto buildings, faces, or different features in the environment, but it also needs to know your exact location (using GPS) and where you are looking in order for the AR content to be relevant to you. There are a lot of companies jockeying for a position to develop this AR Cloud understanding. There’s going to be a lot of interest in that, and then a lot of discussion over property ownership rights. Is this AR Cloud going to be open so that anyone can use and access it, or is it going to be privately held by certain companies? Are we going to tie property rights in an AR space to physical property rights, so when someone owns a building they have some sort of right to the AR content that gets projected over it? Or not? These discussions are going to become mainstream shortly, and I think it will be interesting for people to keep their eyes on that.

Don: I think the general populace is going to have to have a much greater level of fluency with 3D content and data. Right now, everyone from kids to adults has a decent level of fluency around two-dimensional image formats, whether it be JPEGs, GIFs, or just photos that you take with your camera. But it's going to be interesting to see how the general populace will embrace and adopt an understanding of 3D content (whether it be spatial scans of objects like your face, or environments like your bedroom) and how much they will need to learn (if anything) in order to effectively use it, whether it be for professional use, social media, or whatever.

Mike: Yeah, that's a really good point. There's an interesting question here about AR and VR being the next form of human-computer interface; these objects appear to be physical, and you can interact with them with your hands in ways similar to how we're used to interacting with actual objects. So if companies get that sort of interface right, and we can interact with these digital objects in ways that make intuitive sense to us, it might actually make it easier to interact with them. There will be less of a learning curve than there is for other interfaces like a mouse and keyboard, or even a touch screen on a phone. So, I wonder whether there will actually be a need for on-boarding and a deeper understanding, or if there is simply going to be another layer of abstraction where people have less understanding of how the digital content is different from the physical content because they will be able to interact with it in familiar ways. It will be interesting to see how that plays out.

Don: Mike, as always, I really enjoy trying to peer into the future with others who are curious about where these technologies will go, and how they will transform the way we go about our everyday life and work. Thanks for the great conversation! I look forward to our continued exploration of these topics.

Mike: Thanks so much, and I'm already looking forward to next year's conference. It's hard to imagine, given the gap between the last two years, what the next year is going to be like. These are exciting times!