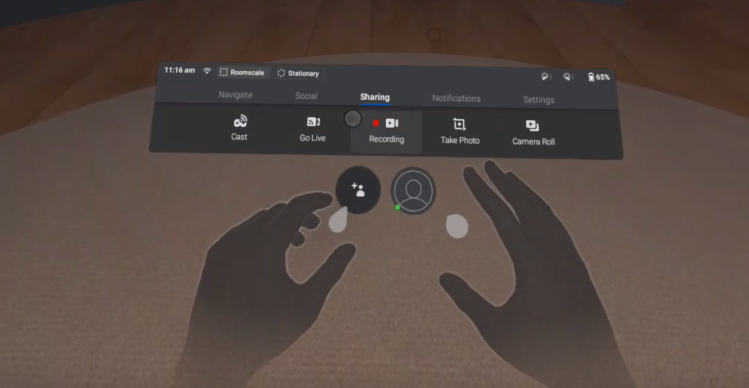

Over the past week, Oculus has been rolling out a software update to its popular Quest VR headset that allows for hand tracking. With hand tracking, a person can use their actual hands as controllers in virtual reality.

Hand Tracking in the Oculus Quest is Here

Our immersive app developers Mike Harris and Yash Bangera dove into the beta offering from Oculus. Here are their impressions, and some unnecessary hand puns.

Mike: To start, I was impressed with the accuracy Oculus was able to achieve running hand tracking on the Quest's Snapdragon 835 processor. The finger tracking held up surprisingly well, even in edge cases such as viewing the hands side-on or holding them in odd poses that hid one or more fingers.

Yash: Yeah, I was impressed at first too, but it felt really clunky as I used it more. Even if one finger from the other hand overlapped, it would lose tracking of both hands.

Mike: That was my experience as well. Although the tracking of individual hands was surprisingly robust, the system really had a hard time with occlusion between the hands (where any parts of the hands overlap from the perspective of the cameras). Oculus’ UX solution to this is to have the hands disappear any time tracking is unreliable, which actually seemed to work pretty well. I remember early hand tracking solutions (e.g. the Leap Motion Controller) would have similar issues, but the hands would just kind of “freak out” and rapidly switch between inaccurate poses whenever there was occlusion.

Yash: Ahh! That makes sense, so they didn’t want to do any sort of prediction? Yeah, the Leap Motion was annoying from time to time when you were trying to do something, and suddenly your hand would be upside down. The individual finger tracking in Oculus is also SO much better than what it was with the Leap Motion.
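The conversation doesn’t say how Oculus decides when to hide the hands, so what follows is only a minimal Python sketch of the general idea Mike describes: draw a hand only while tracking is trustworthy. It assumes a hypothetical per-frame tracking confidence between 0 and 1 and two made-up thresholds. Using separate hide and show thresholds (hysteresis) is our own illustrative choice, meant to keep the hand from flickering when confidence hovers near a single cutoff.

```python
from dataclasses import dataclass


@dataclass
class HandVisibility:
    """Decides whether to draw a tracked hand this frame.

    Hypothetical sketch: `confidence` is an assumed 0..1 tracking score,
    and both thresholds are illustrative values, not Oculus settings.
    """
    hide_below: float = 0.4  # hide the hand once confidence falls under this
    show_above: float = 0.7  # re-show it only after confidence climbs past this
    visible: bool = False

    def update(self, confidence: float) -> bool:
        # Two thresholds (hysteresis) keep the hand from flickering on and
        # off when confidence hovers around a single cutoff.
        if self.visible and confidence < self.hide_below:
            self.visible = False
        elif not self.visible and confidence > self.show_above:
            self.visible = True
        return self.visible


if __name__ == "__main__":
    vis = HandVisibility()
    # e.g. confidence dipping while the other hand briefly occludes this one
    frames = [0.9, 0.8, 0.5, 0.3, 0.5, 0.6, 0.8]
    print([vis.update(c) for c in frames])
    # → [True, True, True, False, False, False, True]
```

The trade-off is a short delay before a hand reappears, in exchange for never showing the rapidly switching, inaccurate poses Mike remembers from earlier hand tracking.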

Mike: I also noticed a substantial improvement in the tracking field of view (FOV). Previous hand tracking solutions were restricted to an area in front of the user, but Oculus’ solution seemed to maintain tracking all the way out to the edge of my vision in the headset, which was impressive.

Yash: Yeah! I noticed that too. I tried to hold my hands as far out to the sides as possible to see how wide I could go without losing tracking, and it was REALLY good. The problem is that there are a lot of interactions where users will want to use their hands in a virtual environment without keeping them directly in front of the headset. So if I am holding something, then look away and come back, I would want the app to remember that the object was being held so that I could resume moving it around.

Mike: That’s a good point, and it leads to the question: what are the use cases for this type of input? As a developer, I’ve always struggled to find the right types of interaction for hand tracking in VR. It’s significantly harder to find an input paradigm that feels comfortable to users when we don’t have the accuracy of discrete button presses and reliable controller tracking.

Yash: Yeah, you’re right! I think having your hands in VR is going to be really intuitive for first-time VR users.

Mike: This is definitely Oculus’s attempt to broaden the accessibility of VR to a wider user base. Plus, the immersiveness of optical hand tracking in VR just feels cool.

Yash: I agree. BUT, I feel hand tracking should only be used for an app or game that has some sort of console in front of the user that they can “physically” interact with. I did not like the pinch-to-select mechanism in the Oculus Home interface.

Mike: Yeah, the pinch mechanism was weird. There was this little cone floating in space between your thumb and forefinger. It acted like a laser pointer that you aimed at a distant screen, and pinching your thumb and forefinger together squeezed the cone to make a selection. I mean, it worked, but it seemed like a really odd choice on Oculus’s part; I’ve got hands in VR now, let me touch stuff!

Yash: Haha! Yeah, I think that because it’s still in beta, they didn’t want to mess around with the Oculus Home UI. They would have had to make a ton more changes instead of just adapting the controller pointer and converting a click into a finger pinch.
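Yash’s guess that Oculus simply mapped a controller click onto a pinch can be illustrated with a small Python sketch. It assumes hypothetical thumb-tip and index-tip positions in metres from some hand-tracking source, plus illustrative press and release distances; none of these names or values come from the Oculus SDK. The sketch fires a single “click” on the frame the fingertips close, and its larger release distance keeps tracking noise from registering repeated clicks.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, assumed


@dataclass
class PinchDetector:
    """Turns thumb/index fingertip distance into controller-style clicks.

    Hypothetical sketch: thresholds are illustrative, not Oculus values.
    """
    press_dist: float = 0.015   # fingertips closer than this start a pinch
    release_dist: float = 0.03  # fingertips farther apart than this end it
    pinched: bool = False

    def update(self, thumb_tip: Point, index_tip: Point) -> bool:
        """Return True only on the frame a pinch begins (the 'click')."""
        d = math.dist(thumb_tip, index_tip)
        clicked = False
        if not self.pinched and d < self.press_dist:
            self.pinched = True
            clicked = True
        elif self.pinched and d > self.release_dist:
            self.pinched = False
        return clicked


if __name__ == "__main__":
    detector = PinchDetector()
    thumb = (0.0, 0.0, 0.0)
    # Index fingertip moving toward and away from the thumb, frame by frame
    gaps = [0.05, 0.01, 0.02, 0.01, 0.04, 0.01]
    print([detector.update(thumb, (g, 0.0, 0.0)) for g in gaps])
    # → [False, True, False, False, False, True]
```

A real pointer would also need a ray to aim with, like the floating cone Mike describes; that part is left out here.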

Mike: That seems right, although at the talk on hand tracking at Oculus Connect a few months ago, they made a big point about the importance of the pinch interaction providing “self-haptic feedback.”

Yash: Wasn’t the pinch interaction already tried and tested on the HoloLens, though? I never liked it there either. Is that how you feel too? I think the average consumer would prefer to just touch stuff in VR instead.

Mike: I agree, though as you pointed out, this is a totally different interaction paradigm from the “laser pointer” VR UI interactions that have become ubiquitous. Perhaps it will just take time for developers to catch up now that hand tracking has been incorporated into what is arguably the most popular mainstream VR headset. Which leads me to my last question: what are the use cases for hand-tracking-based VR applications versus the controller-based apps that have defined VR for the past few years?

Yash: I doubt there will be good solutions for using hand tracking in games any time soon. I feel like hand tracking would be really useful for more serious applications of VR.

Mike: Yeah, I think that’s right. The advantage of hand tracking, at this point, is that it makes VR more accessible. I know we’ve both felt the pain of doing a VR demo with someone who hasn’t experienced VR before—trying to fumble through showing them how to properly use a VR controller. I think it makes sense that the primary use cases for hand tracking will be simple, non-gaming interactions for users that don’t have a lot of experience with VR or video game controllers.

Yash: Yeah! That has happened so many times. And this is exciting, especially for the kind of development we do, not just because of the accessibility but also for the cool factor of having your hands in VR.

Mike: Yeah, I suppose only time will tell once more people have had a chance to try it out for themselves. I guess we’ll just have to wait for more hands-on impressions.

Yash: I see what you did there. I guess we have to be more hands-on to get the upper hand on others.

Mike: I’ve got to hand it to you. That was an underhanded way to get a leg up on my comment.

Yash: I just don’t want to overplay my hand with this. Can you lend me a hand when I need it?

Mike: That was hands down the best pun so far, and that’s a handy rule of thumb.

Thanks to Mike and Yash for sharing their thoughts about Oculus Quest hand tracking. As you can see, the conversation got out of hand at the end.

Oh great, now they’ve got me doing it, too.